CSV Export#

Konfuzio provides possibility to export data in CSV format. Currently, two types of exports are supported: The export of Extraction results, which contains information about the extraction of Documents, and the export of evaluation results, which contains detailed Annotation-level information about the evaluation of test and train Documents.

Export results#

To export results, click DOCUMENTS. Select the Documents whose data you want to download by ticking them. You can select up to 100 Documents. If you select multiple Documents here, they will be combined into one CSV file. Select the action “get all data as a CSV file” in the action tab and click on “go”. The download of the CSV file should start automatically. CSV files can be used with spreadsheet programs such as Microsoft Excel, Google Sheets, etc.

Open CSV file in Excel#

Due to the use of different character sets in Excel and CSV files, formatting may be displayed incorrectly when importing CSV files into Excel. To solve this problem, proceed as follows.

Open Microsoft Excel

Click on the “Data” option in the menu bar.

Then select the “Import from Text/CSV” option.

In the dialog that appears, navigate to the location of the CSV file you want to import. Click on the file name and then click Import

The CSV import can now be configured. Select 65001: Unicode (UTF-8) as the file origin and semicolon as the separator. Then click on Load to display the file correctly.

Sample CSV Output#

Assume the following structure

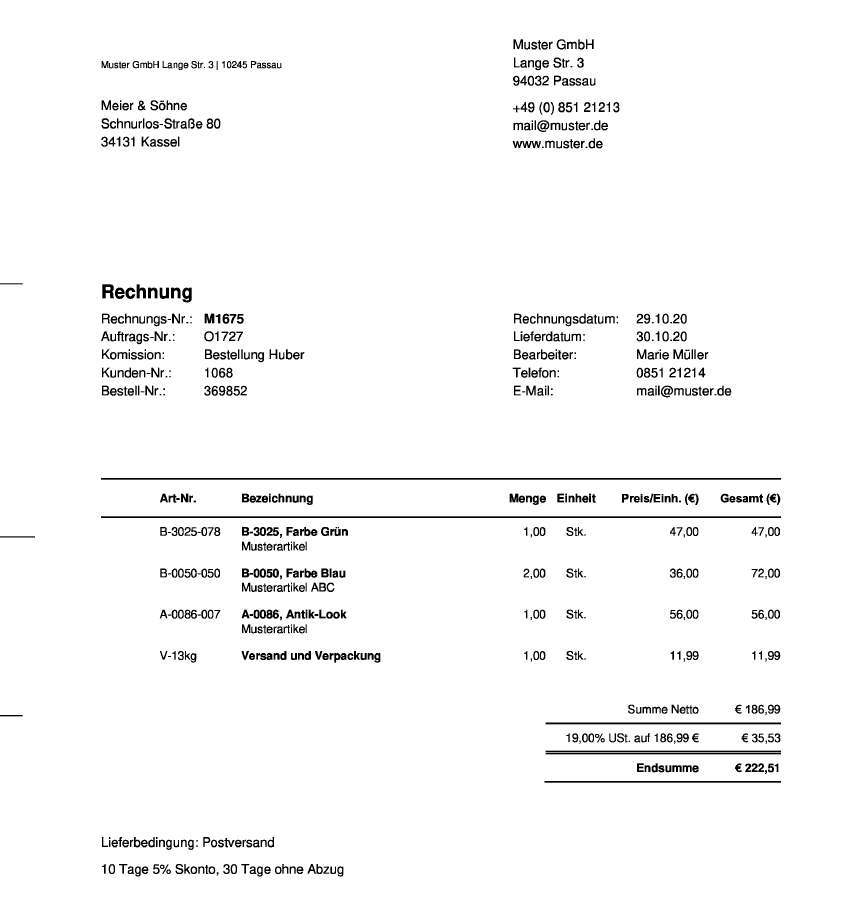

Assume the AI is trained and you uploaded the following invoice:

The header and the first row of the exported CSV looks like the following table.

CSV HEADER |

document |

document_id |

document_category |

Label Set |

Label Set_number |

Invoice item__quantity |

Invoice item__product name |

Invoice item__unit price |

Invoice item__subtotal |

Gross amount |

|---|---|---|---|---|---|---|---|---|---|---|

FIRST ROW |

Sample invoice demo.pdf |

108679 |

Invoice |

Invoice item |

1 |

1 |

B-3025, Farbe Grün Musterartikel |

47 |

47 |

222,51 |

Explanation |

Name of the file |

Unique ID to identify the document |

Category of the document recognized by the AI |

Defined invoice items |

Assignment to an invoice item |

Quantity of service in an invoice item |

Description of the service in an invoice item |

Unit price of the service of an invoice item |

Subtotal of an invoice item |

Gross amount of the invoice |

Sample CSV Output without Label Sets#

If you only use one Label Set in your Category and don’t create any additional Label Sets, the CSV Export will have a different format. Each row in the CSV will represent one Annotation.

Evaluation export#

It is possible to export results of evaluation of Extraction AI into the CSV file. To do it, go to the Extraction AIs and tick the AI that you want to export evaluation for. In the dropdown menu above, select the option “Get evaluation as CSV file”. A downloaded file will have the following structure. Each line is a single Span, meaning that if an Annotation consists of a single Span, it will take 1 line, and if it consists of two, it will take 2 lines, and so on.

CSV HEADER |

table_id |

id_ |

revised |

id_local |

label_id |

duplicated |

start_offset |

end_offset |

is_correct |

is_matched |

category_id |

document_id |

label_set_id |

true_positive |

false_negative |

false_positive |

is_correct_id_ |

label_threshold |

is_correct_label |

annotation_set_id |

clf_true_positive* |

document_id_local |

clf_false_negative |

clf_false_positive |

id_local_predicted |

label_id_predicted |

confidence_predicted |

duplicated_predicted |

end_offset_predicted |

is_correct_label_set |

document_id_predicted |

label_set_id_predicted |

start_offset_predicted |

tokenizer_true_positive** |

tokenizer_false_negative |

tokenizer_false_positive |

above_predicted_threshold |

label_threshold_predicted |

annotation_set_id_predicted |

document_id_local_predicted |

is_correct_annotation_set_id |

label_has_multiple_top_candidates_predicted |

dataset_status |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

SAMPLE ROW |

0 |

1231 |

FALSE |

1 |

2 |

FALSE |

1 |

5 |

TRUE |

TRUE |

5 |

4 |

3 |

TRUE |

FALSE |

FALSE |

TRUE |

0,1 |

TRUE |

4 |

TRUE |

3 |

FALSE |

FALSE |

TRUE |

2 |

0.6 |

FALSE |

5 |

TRUE |

4 |

3 |

1 |

TRUE |

FALSE |

FALSE |

TRUE |

0.9 |

4 |

3 |

TRUE |

FALSE |

Training |

Explanation |

An ID of the row in the exported file |

Global ID of the Annotation |

If Annotation is revised or not |

ID of the Annotation within this evaluation pipeline |

ID of the Label to which this Annotation belongs to |

If there are other rows similar to this |

Beginning of an Annotation |

End of an Annotation |

If an Annotation is correct |

If the predicted Annotation was matched to the original Annotation by Label/Label Set ID |

ID of Category of the Document |

ID of the Document |

ID of the Label Set to which the Label of this Annotation belongs to |

If Annotation was correctly predicted |

If Annotation was not matched / didn’t pass threshold / Label was not predicted |

If Annotation was above threshold but not matched / Label/Label Set/Annotation Set/ID was incorrect |

If Annotation has a valid ID |

Threshold of confidence of a Label assigned to this Annotation |

If a Label was predicted correctly |

ID of Annotation Set the Annotation belongs to |

If predicted Annotation is correct, matched, above threshold and has correct Label |

ID of the Document within this Evaluation process |

If predicted Annotation is correct and matched and Label is correct but it is below threshold |

If predicted Annotation is correct and matched but the Label is wrong |

If Annotation’s local ID is predicted correctly |

ID of a Label of a predicted Annotation |

Confidence of prediction |

If predicted Annotation was duplicated |

Predicted Annotation’s end offset |

If predicted Annotation has correct Label Set of its Label |

ID of a predicted Annotation’s Document |

ID of a predicted Annotation’s Label Set |

Predicted Annotation’s start offset |

If predicted Annotation is correct and matched and local document ID is predicted correctly |

If predicted Annotation is correct and matched and local document ID is not predicted |

If tokenizer_true_positive and tokenizer_false_negative are False and local document ID is predicted |

If predicted Annotation has confidence equal or greater to its Label’s threshold |

Threshold of a predicted Annotation’s Label |

Predicted Annotation’s Annotation Set’s ID |

Predicted Annotation’s Document’s local ID |

If predicted Annotation’s Annotation Set was correctly predicted |

If predicted Annotation’s Label’s feature of multiple top candidates was correctly predicted |

Status of a document to which the Annotation belongs to |

*clf_ prefix denotes metrics from Label Classifier. **tokenizer_ prefix denotes metrics regarding Tokenizer quality; e.g. tokenizer_true_positive means that Annotation is correct, is matched and its start and end were predicted correctly.